At a Glance

Challenge: Civic AI systems are rapidly becoming more complex and opaque. This presents an urgent challenge for the explainable AI (XAI) community: developing explanations of AI systems and of the socio-technical assemblages that surround them.

Outcome: Showed the role diverse publics can play in generating explanations of civic AI systems and their effects. Designed a generative toolkit that helps conceptualize recommendations for publicly generated AI explanations.

The Challenge

Existing efforts toward transparency are overwhelmingly technical. They disregard how algorithms interact with broader networks of materials, relations, cultures, institutions, and histories to affect societies in unjust and harmful ways. A meaningful examination of an AI system requires us to engage with the socio-technical assemblage in which it is placed.

There is a need to (1) overcome the epistemic barriers presented by opaque ‘black-boxed’ algorithms, and (2) situate algorithmic systems in the broader networks of spaces and environments that affect, and are affected by, the algorithm.

My Role

As lead researcher, I owned the entire research process:

- Research design and participatory methodology development

- Stakeholder recruitment across 5 diverse community groups

- Workshop facilitation (led all 8 workshops)

- Workshop documentation (supported by 2 fantastic colleagues for note-taking and picture-taking)

- Mediation toolkit design (interactive mapping setup and take-away materials)

- Qualitative analysis (situational analysis, thematic analysis)

- Writing and presentation

Research Process

Research Through Design Approach

I employed a 'research through design' methodology, using the process of designing and facilitating participatory workshops to investigate how we can generate explanations of broad socio-technical AI systems.

Phase 1: Pilot Studies (Apr 2023)

Conducted 2 pilot workshops at Georgia Tech Demo Day and GVU Research Showcase with ~20 participants to test initial concepts.

Key learning: Participatory mapping and "speculative personation" (asking participants to make predictions as an AI would) effectively prompted critical questions and surfaced participants' embodied discomfort with algorithmic decision-making.

Phase 2: Workshop Design & Preparation

- Developed workshop protocol based on "good enough explanations" framework

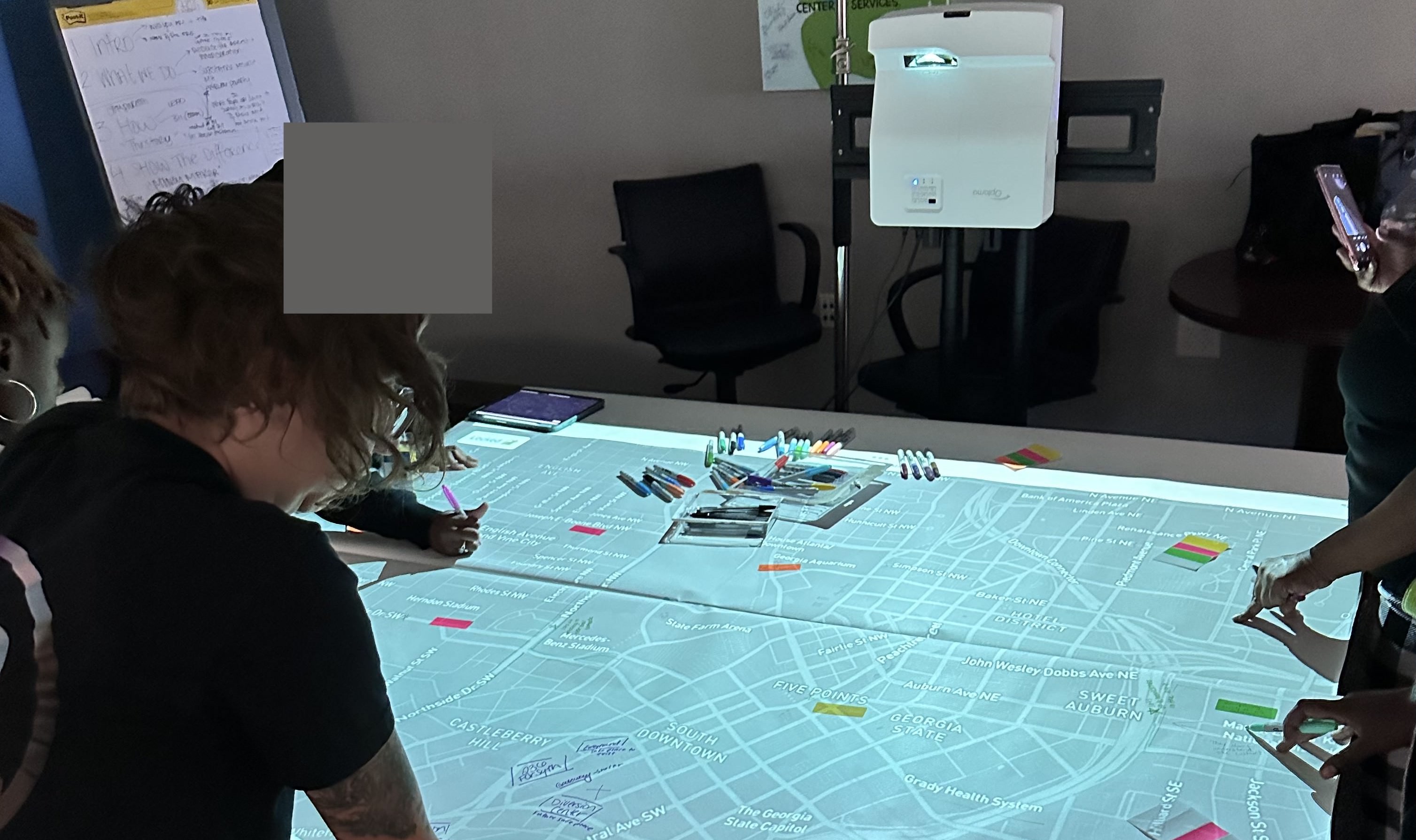

- Created interactive mapping setup using MapSpot projection table

- Designed pre-workshop survey to understand participants' familiarity and goals

- Created take-away toolkit documenting key questions about AI systems

Phase 3: Participatory Workshops (Jun 2023 - Jan 2024)

I conducted 5 workshops with diverse stakeholder groups in Atlanta, GA, bringing together different perspectives on predictive policing and algorithmic systems:

- Police Reform Group: Organization supporting people experiencing poverty, substance use, and mental health concerns

- City Planners: Regional civic planning agency using data-driven methods

- Community Leaders: Open call to neighborhood leaders, violence prevention workers, and civic researchers

- Development Organization: Organization funding local community-centered projects across Georgia

- Educators: Teachers from a nonprofit focused on educator collaboration

Workshop Structure (90 minutes)

- Speculative personation (30 min): Participants identified neighborhoods on a projected map that they believed a predictive policing algorithm might flag as "crime hotspots"

- Reflection & questioning (45 min): Guided discussion exploring their reasoning, the AI system's potential logic, and social/political implications

- Toolkit walkthrough (15 min): Introduced take-away materials for continued investigation

Workshops were audio/video recorded. I also gathered pre-workshop surveys and post-workshop feedback.

Participants engaging with the interactive mapping toolkit during workshops

Phase 4: Analysis

I analyzed workshop data using multiple qualitative methods:

- Situational analysis: Mapped the socio-technical assemblages participants identified

- Thematic analysis: Coded transcripts for patterns in how participants related to AI

- Interaction analysis: Examined how mapping mediated collective sensemaking

- Design analysis: Identified which toolkit features supported which types of inquiry

Key Research Findings

Finding 1: Locals Possess "Partial Explanations" of AI Systems

My initial goal was to identify key dimensions, and related questions, where explainability is desired. Instead, I found that local publics do not only need AI explanations; they are also well positioned to partially explain how algorithms interact with society to affect local contexts.

Design implication: Design tools and features that collect, maintain, and organize publicly generated partial explanations of AI systems.

Finding 2: Users Relate to AI Through Four Domains

When attempting to understand and explain AI systems, participants drew on their relationships with:

- Prediction domain: The service area (policing, education, housing)

- Prediction subject: The people/places predicted (their own neighborhoods)

- Predictive backdrop: Local and global environment (city politics, history)

- Predictive tool: The AI system itself

Design implication: Consider how tools can help locals identify their expertise within these domains, thereby prompting them to generate grounded AI explanations.

Finding 3: Participatory Mapping Can Generate Partial + Local AI Explanations

The mapping interface and its supporting workshop protocol provided:

- Site: Invited workshops were curated to reach a diverse set of stakeholders (and their explanations).

- Media: Mapping helped ground discussions in familiar places where participants consider themselves experts.

- Interactions: Speculative personation helped participants embody the AI tool and consider its varied features.

Method Reflections

What worked:

- Participatory workshop format created rich collective meaning-making

- Tangible mapping activities grounded abstract AI discussions in concrete local contexts

- Video recordings enabled detailed analysis of how participants interacted around the map

Challenges:

- Participants were self-selected, so the workshops may miss the perspectives of those less willing to participate

- Findings are specific to the predictive policing context; their applicability to other domains and geographies is unclear

- Engagement was broad rather than deep; sustained partnerships might reveal different patterns

Impact & Outcomes

Future Work

- Conduct longer-term engagement (multiple sessions) to track how understanding deepens

- Include more direct collaboration with city officials using these systems

- Expand to other types of civic AI beyond predictive policing

- Build digital version of toolkit for broader distribution

Related Publications

1. Good Enough Explanations: How Can Local Publics Understand and Explain Civic Predictive Systems? Shubhangi Gupta. PhD Dissertation. Georgia Institute of Technology.

2. Making Smart Cities Explainable: What XAI Can Learn from the "Ghost Map". Shubhangi Gupta, Yanni Loukissas. Late Breaking Works paper at CHI 2023.

3. Mapping the Smart City: Participatory approaches to XAI. Shubhangi Gupta. Doctoral Consortium at Designing Interactive Systems (DIS) 2023.